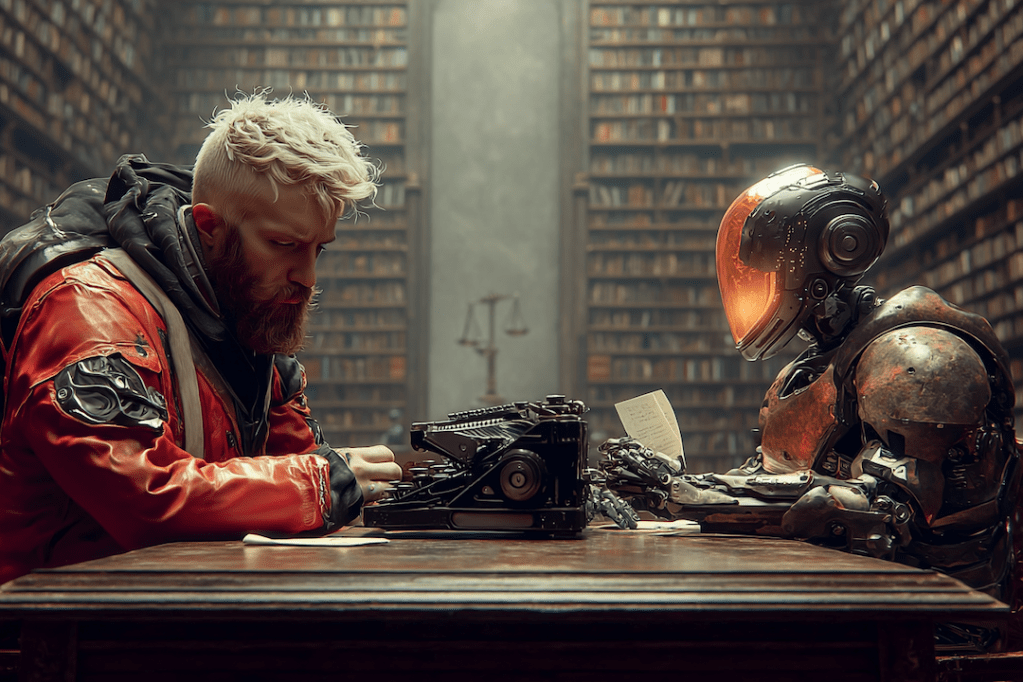

The Legal Storm That Could Reshape the Industry

There’s a legal storm brewing in the world of artificial intelligence, and it might reshape the landscape more than any model update ever could.

Anthropic, the company behind Claude, is currently facing a copyright lawsuit over training its models on massive online libraries of books, some allegedly pirated. The legal implications? Huge. If the courts rule that AI companies must retroactively pay for the copyrighted content they used, the impact won't just ripple; it'll surge through the industry.

Here’s what’s likely to happen:

- Subscription prices soar, and free access disappears

- Indie AI startups collapse under the financial weight

- Innovation slows as models are retrained on cleaner, licensed data (or not trained at all)

- Big players consolidate even more control, pushing smaller voices out of the space

And as someone who’s been building in the background, developing agents, testing frameworks, and exploring the limits of this tech, I’m torn.

I’m Hopeful. But Nervous.

Hopeful, because maybe this finally pushes the industry to evolve ethically. Authors and publishers may be on the verge of becoming co-creators in this space, pointing toward a future where consent and collaboration shape what comes next.

But nervous, too.

Because if access becomes a luxury, micro-innovators like me will be priced out of the future we helped build. If I can't afford to test or experiment, my growth as a developer stalls. And that's not just my loss; it's a loss for the AI community as a whole.

Innovation Needs Tinkerers

Progress in AI didn't come from polished boardrooms; it came from tinkerers, from people pushing tools to their limits.

Quantum computing emerged because we needed it.

Machine learning leapt forward because people poked and prodded at possibility.

If we lose open access, we lose the spirit that made AI exciting in the first place.

We Need More Than Better Models. We Need Better Agreements.

This isn't the end of the AI revolution; it's a reckoning. And it's long overdue.

There's a difference between carving new paths and cutting corners. Indie builders, tinkerers, and researchers have expanded what's possible with the tools we were given. That's not exploitation; it's exploration.

But some didn’t just explore. They extracted. Quietly, and without consent. Now the cracks are showing.

We don’t need to halt innovation. We need to clarify the rules of the road.

A scalpel in the right hands can heal, and in the wrong hands, harm. The same goes for AI. It’s not the tool that’s the problem. It’s how we wield it.

So yes, let's build better tools, but let's also build better trust. Let's shape a future where creativity, transparency, and collaboration can coexist.

The industry doesn’t need to collapse.

It just needs to evolve, with everyone at the table.

Written by Mitch

Creative AI Strategist | Hospitality x Automation Specialist | Human-First Systems Builder

Still building. Still dreaming. Still in my oodie.